This post was written by: Fenwick McKelvey, Matthew Tiessen & Luke Simcoe

Are we living in a simulated “reality”? Although a work of science fiction from 1964, the book Simulacron-3 asks a question relevant to our digitally enabled world. The city from which the book takes its name perturbs its inhabitants. Over the course of the novel, it turns out that the city is a simulation run on a computer by scientists as a market research experiment. The city is one giant public opinion poll, data-mining the minds of millions of people to help companies and governments make decisions. Though a cautionary tale written in the era of Command and Control and Operations Research, the book accurately describes the modern Internet and our present moment: an Internet that both facilitates our communication and informs market research. The primary purpose of digital media, we contend, is simulation, with free communication merely an appealing side effect or distraction.

The growing mediation of everyday life by the Internet and social media, coupled with Big Data mining and predictive analytics, is turning the Internet into a simulation machine. The collective activity of humanity provides the data that informs the decision-making processes of algorithmic systems, such as high-frequency trading and aggregated news services, that are in turn owned by those who wield global power and control: banks, corporations, governments. The Internet is no longer primarily a space of communication but one of simulation. By simulation, we do not mean a reproduction of reality “as it is out there,” but rather a sort of reality-in-parallel, one that generates its own sets of tangible quanta and its own “realities”-to-be-calculated. The issue for our times, then, is not that we Netizens – or the inhabitants of Simulacron-3 – need to grapple with the idea that we are living fake or fictitious virtual lives; rather, we must come to terms with our own online activities feeding the appetites of algorithmically driven machines designed to facilitate the expansion of profit and power by quantifying and modulating our desires. We’ve become more valuable to the Internet and its scanbots as aggregate data inputs than we ever were as consumers of banner ads.

The imperative driving today’s Internet and mobile technology has more to do with informing algorithms designed to manage plugged-in populations than with facilitating free expression. Social media is valuable not because it involves tweets, opinions and desires expressive of the general experience of being alive, but because this data becomes a useful resource for finance, business, and government, akin to the collective behaviour of Simulacron-3.

Counter-Culture and Control

Digital networks are making real the fantasies of the cyberneticists and information theorists who first developed computing. Computers promised to make the world more stable and easier to manage. Marshall McLuhan echoed this promise in his famed Playboy interview from 1969:

There’s nothing at all difficult about putting computers in the position where they will be able to conduct carefully orchestrated programing of the sensory life of whole populations. I know it sounds rather science-fictional, but if you understood cybernetics you’d realize we could do it today. The computer could program the media to determine the given messages a people should hear in terms of their over-all needs, creating a total media experience absorbed and patterned by all the senses. We could program five hours less of TV in Italy to promote the reading of newspapers during an election, or lay on an additional 25 hours of TV in Venezuela to cool down the tribal temperature raised by radio the preceding month. By such orchestrated interplay of all media, whole cultures could now be programed in order to improve and stabilize their emotional climate, just as we are beginning to learn how to maintain equilibrium among the world’s competing economies.

Computers, McLuhan understood, had the potential to observe and intervene in the global emotional zeitgeist. Digital simulation blankets the earth, keeping us warm and cozy in the face of an unstable atmosphere. McLuhan’s idea that the world could reach equilibrium echoed Norbert Wiener, who called for cybernetic systems integrating humans and machines as a way, at once, to avoid entropy and achieve homeostasis. Wiener’s dreams also resonated with those of Buckminster Fuller, who sought as a generalist to create systems that could make the world a better place. Such an idea drove early work on digital computing, as described by Paul Edwards, who characterized the Semi-Automatic Ground Environment as a way to make the world more predictable and manageable.

When computing was new, books like Simulacron-3 described its managerial promise as a dystopia. The worry was that computers would become the next “Big Brother.” Fred Turner begins his important history of computer culture with students burning IBM punch cards because they did not want to be part of the machine. His book, however, traces the evolution of computing from a symbol of totalizing control to personal computers as symbols of personal liberation.

Digital computer networks still promise stability through simulation, but this “closed world” promise has been refracted through the counterculture and the cyberculture described by Fred Turner. Resistance to the 1960s formation of the cybernetic dream has, ironically, allowed a reiteration of the same premise in the modern age. Personal computers, it’s commonly held, allow humans to express themselves more freely. We won’t argue with that claim, but let’s not forget that this “free expression” is allowed to persist only to nourish data aggregators and simulators.

Our position is similar to that of Darin Barney, who described the Internet as a standing reserve of bits. Digital mediation and communication transform social activity and information into a Heideggerian “standing reserve” that can be consumed single-mindedly as a resource. While Barney wrote before the emergence of social media and big data, he nevertheless foreshadows our present, one where ever-growing troves of human communication data reside in global server farms as standing reserves. A few examples make this phenomenon clearer.

These days over 80 per cent of stock trading on Western financial markets is done by computer algorithms that make decisions in nanoseconds, as we’ve discussed previously. These decisions to trade result not from human intuition (let alone discernment of such fuddy-duddy things as “value”), but from the “logic” embedded in these algorithms: fed by a variety of information sources as inputs, they output decisions about what to buy and sell. It is becoming more apparent that the Internet has been wired as an input for these decisions. For example, the BBC reports that Google searches can predict stock market decisions. Indeed, United Airlines lost most of its stock value in 2008 when the Google News aggregator mistakenly categorized a six-year-old “news” story as current. The story discussed the airline’s insolvency – long since resolved – yet investors, or more likely machines, reacted promptly by dumping its stock. More recently, hackers breached the Twitter account of the Associated Press and tweeted that President Obama had been attacked. Algorithms perceived a potential crisis and reacted instantly, causing the S&P 500 to lose $130 billion in “value.”

Firms increasingly sell access to this future. Companies like Recorded Future and Palantir now mine the data that the Internet so freely provides and sell the resulting predictions. Recorded Future, for example, markets its products with the promise to “unlock the predictive power of the web.” While these firms might differ in their algorithmic systems, they all depend on access to the standing reserve of the Web.

The horizons of possibility that stem from the world of algorithmic trading were prefigured by William Gibson, who ends his most recent novel, Zero History, with antagonist-turned-protagonist Hubertus Bigend able to predict the future using what he calls the “order flow”:

It’s the aggregate of all the orders in the market. Everything anyone is about to buy or sell, all of it. Stocks, bonds, gold, anything. If I understood him, that information exists, at any given moment, but there’s no aggregator. It exists, constantly, but is unknowable. If someone were able to aggregate that, the market would cease to be real… Because the market is the inability to aggregate the order flow at any given moment.

In the book’s final pages, Bigend confesses that he needs only the briefest lead on the present – “seven seconds, in most cases” – to construct his desired financial future. Knowing “seven seconds” ahead is all anyone needs to eke out a profit. As both the fictional Bigend and today’s very real army of stock trading bots attest, the value of the Internet lies in making accessible the data companies need to know the future with greater certainty than ever before. Bigend wins in the end because he has exclusive access to the order flow.

New Digital Divides?

The order flow demonstrates the digital divides being created by the Internet as simulation machine. A divide emerges between those included in, and those excluded from, the future of preemption and prediction. Those who are excluded no longer lack information; they lack input into algorithmically driven future-making.

Though a divide exists between those qualified as inputs and those filtered out as noise, another divide involves what (more so than who) has access to the order flow – or what Twitter calls the “firehose” – in the first place. Access to the firehose is one of the critical questions about Big Data raised by danah boyd and Kate Crawford. A lack of access to algorithmic control leads to a lack of on- and offline agential control insofar as the Internet’s end users – we, the public – lack access to the present: to our own flows of data. The order flow is too valuable or too dangerous to be entirely public.

It follows that social and financial exclusion and asymmetries of power will intensify as the Internet succeeds in making its algorithmically sorted simulation “real.” Each iteration of the Web further disavows uncertainty, contributing to an “evidence-based” and data-driven simulation that, increasingly, will overcode our everyday lives. Those inputs that cannot be aggregated will be removed or ignored. There is little hope, or need, for the system to self-correct should things go “wrong.” Those invested in the simulation worry less about the outcome than about the probability of its realization. A future that is unequal and knowable is better than an unknowable – or uncertain – future concocted with freedom, equality, and flourishing as its goal.

Embracing the New Trickster: Vacuoles of Non-Communication and Other Circuit Breakers

Our simulated future requires new tactics of resistance. In this regard we’re inspired – as are many others – by the work of Gilles Deleuze. Deleuze once presciently wrote:

Maybe speech and communication have been corrupted. They’re thoroughly permeated by money—and not by accident but by their very nature. We’ve got to hijack speech. Creating has always been something different from communicating. The key thing may be to create vacuoles of noncommunication, circuit breakers, so we can elude control.

These are the tasks of the modern resistance. The transitory British collective known as The Deterritorial Support Group also draws on Deleuze to offer a perfect example of the vacuole of non-communication in their writings on Internet memes:

We didn’t spread any such rumour – we hijacked an existing meme with enormous potential. Internet memes originally functioned as a subject of the Internet hate machine – operating in a totally amoral fashion, where achieving “lulz” was the only aim. Within the past few years, memes have started to take on a totally different function, and what would have been perceived as a slightly pathetic bunch of bastards in the past are today global players in undermining international relations – namely in the complex interaction of Wikileaks with Anonymous, 4chan and other online hooligans.

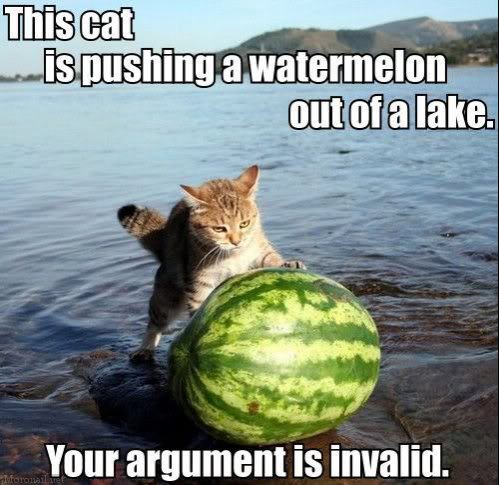

There’s no coherent analysis to be had of this at the moment. However “lulz” also demonstrate their potential as part of a policy of radical refusal to the demands of capital. When asked by liberals “Do you condone or condemn the violence of the Black Bloc?” We can only reply in unison “This cat is pushing a watermelon out of a lake. Your premise is invalid”.

Today it seems to make more sense to make no sense, since logical forms of antagonistic communication are still inputs that feed bots making stock market decisions. Gawker’s Adrien Chen, referencing Harmony Korine’s absurd(ist) Reddit AMA, echoes this sentiment when he writes: “It’s unclear if he’s on or off something but his typo-and-non-sequitur-filled performance in his AMA today was inspired. The only way to resist the insufferable PR machine is clogging it with pure nonsense [emphasis added].” Nonsense might be the new sabotage.

In fact, nonsense clarifies what matters about Internet tricksters like LulzSec or the hordes of trolls that spew forth from sites like 4chan. The creator of 4chan, Christopher Poole, suggested that 4chan matters because it allows for an anonymity not possible on platforms like Facebook. As Jessica Beyer attests in the conclusion of her dissertation and forthcoming book, the design of the 4chan platform is radical because it does not lend itself to data mining and monitoring. Content is unfiltered, ephemeral and too noisy to support predictions. In other words, 4chan’s refusal to archive itself results in an online social space that consciously resists simulation. And as we’ve observed elsewhere, the culture of 4chan works alongside its code to confound visitors, both human and nonhuman. The community actively resists categorization, and the only memes or behaviours that cohere are those with sufficient room for ambiguity and playful interpretation.

4chan users often deploy their penchant for nonsense in a tactical fashion. The constant stream of obscenity, inside jokes and non sequiturs they insist upon has rendered the site one of the few online forums not able to turn a profit. Despite a base of 22 million monthly users, Poole has struggled to find advertisers willing to have their products appear next to Goatse (he also spoke at a 2012 conference centred on mining online creativity and bragged that 4chan loses money). Outside the confines of the site, trolls lurk under the Internet’s myriad bridges, circulating false news stories, hijacking online polls and marketing campaigns, and generally making online information just a little less reliable than its non-simulated cousin. And when those kernels of spurious data get fed into the algorithmic feedback loop, who knows what lulzy futures they may give rise to?

This is hardly specific to 4chan. In her work on Second Life, Burcu Bakioglu describes how “griefers” rejected the commodification of their virtual world through in-game raids and hacks that spawned hordes of offensive sprites, ranging from flying penises to swastikas. This type of “grief-play” jammed Second Life’s signification system, literally causing sims to crash, and disrupted Linden Lab’s virtual economy. Such actions suggest new horizons of possibility for “acting out” because trolls and griefers interfere with the logics driving simulation and computational sense-making.

Pranks can also reveal the algorithmic systems at work in our media. To mine a homespun example, we can look at what happened when Canadian Prime Minister Stephen Harper supposedly choked on a hash brown. His Members of Parliament appeared convinced of their leader’s fate as, one by one, they posted the news item to Twitter. At least it seemed that way until their party admitted that a hack of its website had circulated the fake story. More than embarrassing the governing party, the hackers revealed the frailty of its communication apparatus. The Hash Brown incident would have been dismissed as hearsay, but instead appeared credible because party members had posted it to Twitter – or rather, algorithms posted the story because their Twitter accounts were configured to automatically forward news items from the party’s central site. Indeed, these days the tactical placement of online jokes and ridiculousness (let alone false news items about stocks, terrorism, or disasters) can function as a form of research on algorithmic media. After all, what is the trick but “a method by which a stranger or underling can enter the game, change its rules, and win a piece of the action” (Hyde, 1998, p. 204).

These resistances, however, run the risk of simply being filtered out. We can imagine a future in which 4chan is either removed, to ensure that an Internet with real names offers better simulation-supporting information, or ignored altogether by algorithms programmed to avoid websites that traffic in troublemakers, perverts, and weirdos. Conversely, the potential of the trickster could be co-opted and used to train the machine. By trying to predict a future of nonsense, are we helping the machine learn? Can the right algorithm data-mine nonsense and render it predictable? The question remains whether there can be any popular resistance at all to today’s – and tomorrow’s – algorithmically modulated simulation. Will people join in a nonsensical refrain? Does free communication matter enough to publics that they will stop communicating (or at least, stop making sense)? And if the Internet fails to simulate effectively, will the externalities of free communication endure?

About the Authors

Dr. Fenwick McKelvey is an SSHRC Postdoctoral Fellow and Visiting Scholar at the Department of Communication at the University of Washington. His postdoctoral project, entitled Programming the Vote, traces the early history of computers in politics. He will start as an Assistant Professor in the Department of Communication Studies. He completed his MA (2008) and his PhD (2012) in the joint program in Communication and Culture of York University and Ryerson University. His doctoral work was supported by an SSHRC Joseph-Armand Bombardier Canada Graduate Scholarship. Fenwick investigates how algorithms afford new forms of control in digital media. He explores this issue of control through studies of Internet routing algorithms and political campaign management software. He is also co-author of The Permanent Campaign: New Media, New Politics with Dr. Elmer and Dr. Langlois. He is presently developing a book manuscript entitled Media Demons: Algorithms, Internet Routing and Time. His personal website is: http://www.fenwickmckelvey.com/.

Dr. Matthew Tiessen is an SSHRC Postdoctoral Research Fellow at the Infoscape Research Lab in the Faculty of Communication and Design at Ryerson University. He teaches and publishes in the areas of technology studies, digital culture, contemporary theory, and visual culture. Matthew’s doctoral research on affect, agency, and creativity was funded by an Izaak Walton Killam Memorial Scholarship and an SSHRC Doctoral Fellowship. Dr. Tiessen is currently under contract to write a book on “apps,” affect, and gamification with U of Toronto Press. His research is featured in academic journals and anthologies such as Theory, Culture & Society, Cultural Studies<=>Critical Methodologies, Surveillance & Society, and Space and Culture. He is also an exhibiting artist. Matthew’s homepage can be found here: https://manu.rcc.ryerson.ca/~mtiessen/.

Luke Simcoe, MA, is a journalist and independent scholar. He holds an MA in the joint program in Communication and Culture of York University and Ryerson University (2012). His first major research paper explored how participants on the popular 4chan message board used carnivalesque humour and memes to foster a collective identity and articulate a political orientation towards the internet, summed up by the phrase “the internet is serious business”. He writes at: http://lukesimcoe.tumblr.com/.