Many of us who study new media, whether we do so experimentally or qualitatively, with data big or small, are tracking the unfolding debate about the Facebook “emotional contagion” study, published recently in the Proceedings of the National Academy of Sciences. The research, by Kramer, Guillory, and Hancock, argued that small shifts in the emotions of those around us can shift our own moods, even online. To test this experimentally, they altered the News Feeds of 310,000 Facebook users, excluding a handful of status updates from friends that contained either happy words or sad words, and then measured the emotional content of what those users subsequently posted. A matching number of users had randomly selected posts left out of their News Feeds, to serve as control groups. The lead author is a data scientist at Facebook, while the others have academic appointments at UCSF and Cornell University.

I have been a bit reluctant to speak about this, as (full disclosure) I am both a colleague and friend of one of the co-authors of this study; Cornell is my home institution. And, I’m currently a visiting scholar at Microsoft Research, though I don’t conduct data science and am not on specific research projects for the Microsoft Corporation. So I’m going to leave the debates about ethics and methods in other, capable hands. (Press coverage: Forbes 1, 2, 3; Atlantic 1, 2, 3, 4, Chronicle of Higher Ed 1; Slate 1; NY Times 1, 2; WSJ 1, 2, 3; Guardian 1, 2, 3. Academic comments: Grimmelmann, Tufekci, Crawford, boyd, Peterson, Selinger and Hartzog, Solove, Lanier, Vertesi.) I will say that social science has moved into uncharted waters in the last decade, from the embrace of computational social scientific techniques, to the use of social media as experimental data stations, to new kinds of collaborations between university researchers and the information technology industry. It’s not surprising to me that we find it necessary to raise concerns about how that research should work, and look for clearer ethical guidelines when social media users are also “human subjects.” In many ways I think this piece of research happened to fall into a bigger moment of reckoning about computational social science that has been coming for a long time — and we have a responsibility to take up these questions at this moment.

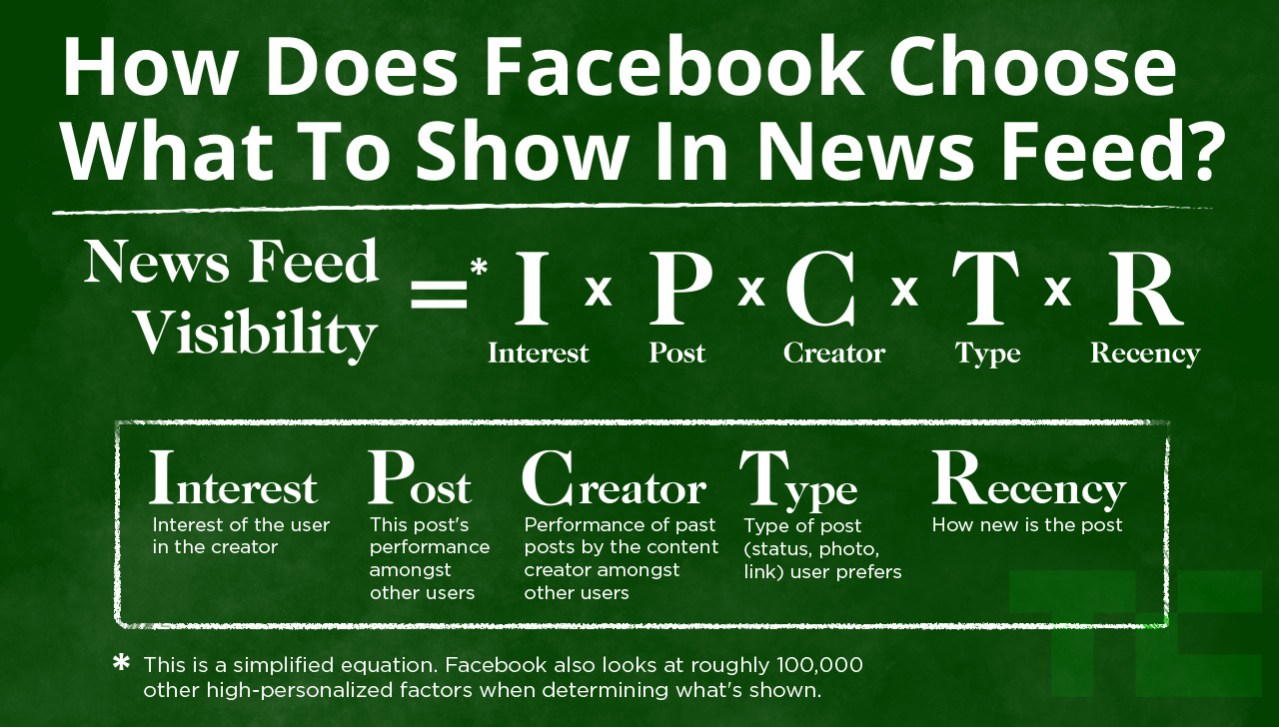

But a key issue, both in the research and in the reaction to it, is about Facebook and how it algorithmically curates our social connections, sometimes in the name of research and innovation, but also in the regular provision of Facebook’s service. And that I do have an opinion about. The researchers depended on the fact that Facebook already curates your News Feed, in myriad ways. When you log onto Facebook, the posts you’re immediately shown at the top of the News Feed are not every post from your friends in reverse chronological order. Of course Facebook has the technical ability to do this, and it would in many ways be simpler. But their worry is that users will be inundated with relatively uninteresting (but recent) posts, will not scroll down far enough to find the few among them that are engaging, and will eventually quit the service. So they’ve tailored their “EdgeRank” algorithm to consider, for each status update from each friend you might receive, not only when it was posted (more recent is better) but other factors, including how regularly you interact with that user (e.g. liking or commenting on their posts), how popular they are on the service and among your mutual friends, and so forth. A post with a high rating will show up, a post with a lower rating will not.

So, for the purposes of this study, it was easy to also factor in a numerical count of happy or sad emotion words in the posts as well, and use that as an experimental variable. The fact that this algorithm does what it does also provided legal justification for the research: that Facebook curates all users’ data is already part of the site’s Terms of Service and its Data Use Policy, so it is within their rights to make whatever adjustments they want. And the Institutional Review Board at Cornell did not see a reason to even consider this as a human subjects issue: all that the Cornell researchers got was the statistical data produced from this manipulation, manipulations that are a normal part of the inner workings of Facebook.
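To make the mechanics concrete, here is a minimal Python sketch of the kind of curation described above. It is an illustration under loud assumptions, not Facebook's actual algorithm or the study's code: the `Post` fields, the `score` formula, the tiny word lists, and the `omit_probability` parameter are all hypothetical stand-ins for factors the text mentions (recency, how often you interact with a friend, their popularity, and a count of emotion words).

```python
import random
from dataclasses import dataclass

# Hypothetical mini-lexicons; a real study would use a far larger word list.
POSITIVE_WORDS = {"happy", "great", "love"}
NEGATIVE_WORDS = {"sad", "awful", "hate"}


@dataclass
class Post:
    text: str
    hours_old: float   # time since posting
    affinity: float    # assumed 0..1: how often the viewer interacts with the author
    popularity: float  # assumed 0..1: how popular the author is


def score(post):
    """Toy relevance score: newer, closer, more popular posts rank higher."""
    recency = 1.0 / (1.0 + post.hours_old)
    return recency + post.affinity + post.popularity


def emotion_count(post, lexicon):
    """Count words in the post that appear in the given emotion lexicon."""
    words = (w.strip(".,!?").lower() for w in post.text.split())
    return sum(1 for w in words if w in lexicon)


def build_feed(posts, limit, suppress=None, omit_probability=0.0, rng=None):
    """Rank posts by score and keep the top `limit`.

    If `suppress` is a lexicon, each post containing one of its words is
    left out with probability `omit_probability` (the experimental tweak).
    """
    rng = rng or random.Random()
    candidates = []
    for post in posts:
        has_emotion = suppress is not None and emotion_count(post, suppress) > 0
        if has_emotion and rng.random() < omit_probability:
            continue  # silently omitted from this viewer's feed
        candidates.append(post)
    return sorted(candidates, key=score, reverse=True)[:limit]


posts = [
    Post("I am so happy today!", hours_old=1.0, affinity=0.5, popularity=0.2),
    Post("Feeling sad and awful", hours_old=0.5, affinity=0.9, popularity=0.9),
    Post("Lunch was fine", hours_old=2.0, affinity=0.1, popularity=0.1),
]
control = build_feed(posts, limit=3)
treated = build_feed(posts, limit=3, suppress=NEGATIVE_WORDS, omit_probability=1.0)
```

The point of the sketch is how small the experimental change is: the ranking in `build_feed` is already a selection, and the intervention is one extra condition inside a loop that was discarding posts anyway.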

Defenders of the research (1, 2, 3), including Facebook, have pointed to this as a reason to dismiss what they see as an overreaction. This takes a couple of forms, not entirely consistent with each other: Facebook curates users’ News Feed anyway, it’s within their right to do so. Facebook curates users’ News Feed anyway, probably already on factors such as emotion. Facebook curates users’ News Feed anyway, and needs to understand how to do so by engaging in all sorts of A/B testing, which this was an example of. Facebook curates users’ News Feed anyway, get over it. All of these imply that it’s simply naive to think of this research as a “manipulation” of an otherwise untouched list; your News Feed is a construction, built from some of the posts directed to you, according to any number of constantly shifting algorithmic criteria. This was just one more construction. Those who are upset about this research are, according to its defenders, just ignorant of the realities of Facebook and its algorithm.

More and more of our culture is curated algorithmically; Facebook is a prime example, though certainly not the only one. But it’s easy for those of us who pay a lot of attention to how social media platforms work, engineers and observers alike, to forget how unfamiliar that is. I think, among the population of Facebook users — more than a billion people — there’s a huge range of awareness about these algorithms and their influence. And I don’t just mean that there are some poor saps who still think that Facebook delivers every post. In fact, there certainly are many, many Facebook users who still don’t know they’re receiving a curated subset of their friends’ posts, despite the fact that this has been true, and “known,” for some time. But it’s more than that. Many users know that they get some subset of their friends’ posts, but don’t understand the criteria at work. Many know, but do not think about it much as they use Facebook in any particular moment. Many know, and think they understand the criteria, but are mistaken. Just because we live with Facebook’s algorithm doesn’t mean we fully understand it. And even for those who know that Facebook curates our News Feeds algorithmically, it’s difficult as a culture to get beyond some very old and deeply sedimented ways to think about how information gets to us.

The public reaction to this research is proof of these persistent beliefs: a collective groan from our society as it adjusts to a culture that is algorithmically organized. Social media, and Facebook most of all, truly violate a century-old distinction we know very well, between what were two distinct kinds of information services. On the one hand, we had “trusted interpersonal information conduits”: the telephone companies, the post office. Users gave them information intended for others, and the service was entrusted to deliver that information. We expected them not to curate or even monitor that content; in fact, we made it illegal for them to do otherwise. We expected that our communication would be delivered, for a fee, and we understood the service as the commodity, not the information it conveyed. On the other hand, we had “media content producers” (radio, film, magazines, newspapers, television, video games), where the entertainment they made for us felt like the commodity we paid for (sometimes with money, sometimes with our attention to ads), and it was designed to be as gripping as possible. We knew that producers made careful selections based on appealing to us as audiences, and deliberately played on our emotions as part of their design. We were not surprised that a sitcom was designed to be funny, even that the network might conduct focus group research to decide which ending was funnier (A/B testing?). But we would be surprised, outraged, to find out that the post office delivered only some of the letters addressed to us, in order to give us the most emotionally engaging mail experience.

And Facebook is complicit in this confusion, as they often present themselves as a trusted information conduit, and have been oblique about the way they curate our content into their commodity. If Facebook promised “the BEST of what your friends have to say,” then we might have to acknowledge that their selection process is and should be designed, tested, improved. That’s where this research seems problematic to some, because it is submerged in the mechanical workings of the News Feed, a system that still seems to promise to merely deliver what your friends are saying and doing. The gaming of that delivery, be it for “making the best service” or for “research,” is still a tactic that takes cover under its promise of mere delivery. Facebook has helped create the gap between expectation and reality that it has currently fallen into.

That to me is what bothers people, about this research and about a lot of what Facebook does. I don’t think it is merely naive users not understanding that Facebook tweaks its algorithm, or that people are just souring on Facebook as a service. I think it’s an increasing, and increasingly apparent, ambivalence about what Facebook is, and its divergence from what we think it is. Despite the cries of those most familiar with how these platforms work, it takes a while, years, for a culture to adjust itself to the subtle workings of a new information system, and to stop expecting of it what traditional systems provided.

For each form of media, we as a public can raise concerns about its influence. For the telephone system, it was about whether they were providing service fairly and universally: a conduit’s promise is that all users will have the opportunity to connect, and as a nation we forced the telephone system to ensure universal service, even when it wasn’t profitable. Their preferred design was acceptable only until it ran up against a competing concern: public access. For media content, we have little concern about being “emotionally manipulated” by a sitcom or a tear-jerker drama. But we do worry about that kind of emotional manipulation in news, like the fear mongering of cable news pundits. Here again, their preferred design is acceptable until it runs up against a competing concern: a journalistic obligation to the public interest. So what is the competing interest here? What kind of interventions are acceptable in an algorithmically curated platform, and what competing concern do they run up against?

Is it naive to continue to want Facebook to be a trusted information conduit? Is it too late? Maybe so. Though I think there is still a different obligation when you’re delivering the communication of others, an obligation Facebook has increasingly forgone. Some of the discussion of this research suggests that the competing concern here is science: that the ethics are different because this manipulation was presented as scientific discovery, a knowledge project for which we have different standards and obligations. But, frankly, that’s a troublingly narrow view. Just because this algorithmic manipulation came to light because it was published as science doesn’t mean that it was the science that was the problem. The responsibility may extend well beyond, to Facebook’s fundamental practices.

Is there any room for a public interest concern, like for journalism? Some have argued that Facebook and other social media are now a kind of quasi-public sphere. They not only serve our desire to interact with others socially, they are also important venues for public engagement and debate. The research on emotional contagion was conducted during the week of January 11-18, 2012. What was going on then, not just in the emotional lives of these users, but in the world around them? There was ongoing violence and protest in Syria. The Costa Concordia cruise ship ran aground in the Mediterranean. The U.S. Republican party was in the midst of its nomination process: Jon Huntsman dropped out of the race that week, and Rick Perry the day after. January 18th was the SOPA protest blackout day, something that was hotly (emotionally?) debated during the preceding week. Social media platforms like Facebook and Twitter were in many ways the primary venues for activism and broader discussion of this particular issue. Whether or not the posts that were excluded by this research pertained to any of these topics, there’s a bigger question at hand: does Facebook have an obligation to be fair-minded, or impartial, or representative, or exhaustive, in its selection of posts that address public concerns?

The answers to these questions, I believe, are not clear. And this goes well beyond one research study; it is a much broader question about Facebook’s responsibility. But the intense response to this research, on the part of press, academics, and Facebook users, should speak to them. Maybe we latch onto specific incidents like a research intervention, maybe we grab onto scary bogeymen like the NSA, maybe we get hooked on critical angles on the problem like the debate about “free labor,” maybe we lash out only when the opportunity is provided, like when Facebook tries to use our posts as advertising. But together, I think these represent a deeper discomfort about an information environment where the content is ours but the selection is theirs.